Fallacies, Politics, and ChatGPT

I thought I’d have a play with ChatGPT, to see if it could help me write about, and refresh my knowledge of, logical fallacies. I also wanted to test some of the various “bugs” I’ve been reading about (for example, it’s terrible with numbers).

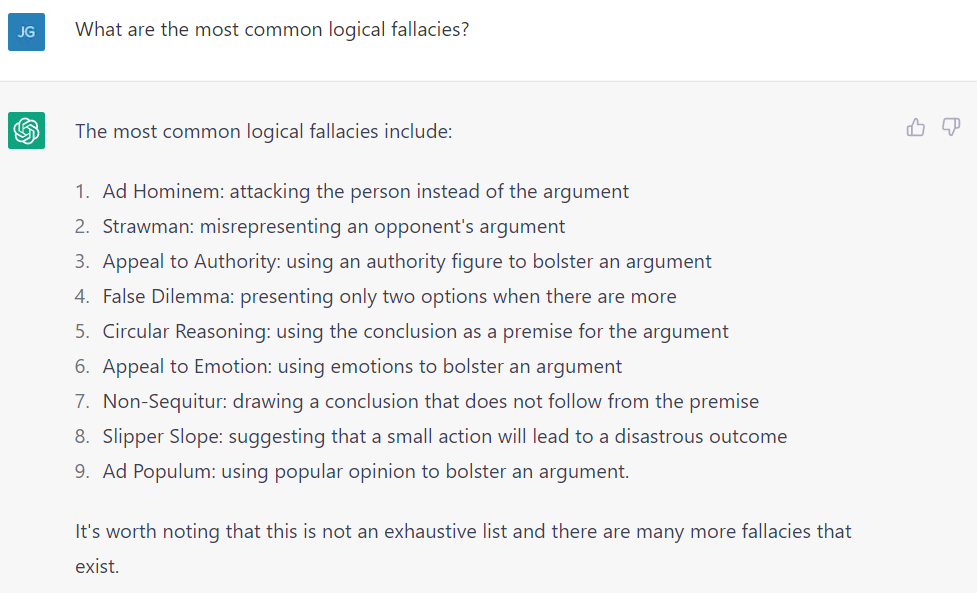

I asked it for a list of common logical fallacies, then asked it to provide definitions and real examples from political debates. The answers are copy-pasted from ChatGPT with minimal editing. My thoughts on the entire process are further down.

The Fallacies

Ad Hominem

- Attacking the person instead of the argument.

- In the 2016 US Presidential debates, Donald Trump said to Hillary Clinton: “She’s got experience, but it’s bad experience. It’s bad, bad experience.”

Strawman

- Misrepresenting an opponent’s argument in order to make it easier to attack.

- In the first 2020 US Presidential debate between Donald Trump and Joe Biden, Trump said “Your party wants to abolish the suburbs” while discussing affordable housing policies, although neither Joe Biden nor the Democratic Party ever advocated for that.

False Dilemma

- Presenting two options as the only possibilities when there are more.

- In a political speech, George W. Bush said: “Either you are with us, or you are with the terrorists.”

Circular Argument

- Using the conclusion as a premise in the argument.

- In a political speech, Vladimir Putin said: “Russia is a country that is built on the principles of democracy and freedom.”

Appeal to Emotion

- Using emotional appeals instead of evidence to make an argument.

- In a political campaign ad, Franklin D. Roosevelt said “This is more than a political campaign, it is a call to arms. Join me in saving our country.”

Slipper Slope

- Arguing that a particular action will lead to a chain of events that will ultimately lead to an undesirable event.

- In a political debate, Sarah Palin said “If we don’t stop this healthcare bill, it’s going to lead to death panels for our elderly and disabled.”

Red Herring

- Introducing irrelevant information in an argument to divert attention from the real issue at hand.

- In a debate over healthcare reform, a politician said “Yes, we are discussing healthcare, but what about the rising national debt? We can’t afford to implement this plan.” (ChatGPT failed to find a real-world example from politics)

Bandwagon Fallacy

- Assuming something is true because many people believe it.

- In a 2016 US Presidential debate, Donald Trump said: “I have the best temperament, and I have the best judgment. I have the most loyal people. I could stand in the middle of 5th Avenue and shoot somebody and I wouldn’t lose any voters.”

Hasty Generalization

- Reaching a conclusion based on insufficient evidence or a small sample size.

- In a 2016 US Presidential debate, Donald Trump said: “I have been all over the country, and I see the way our inner cities are struggling. The whole country is in a bad state.”

False Cause

- Assuming that a relationship between two things means that one thing causes the other.

- In a 2020 US Presidential debate, Donald Trump said: “Our economy is struggling because of the influx of immigrants taking jobs away from American citizens.”

Begging the Question

- Using the conclusion as a premise in the argument.

- In a 2020 US Presidential debate, Donald Trump said: “We need to make our country great again, because it is not great now.”

Post Hoc Fallacy

- Assuming that because one event occurred after another, the first event caused the second.

- In a 2008 political debate, then Senator, now Vice President Joe Biden said: “Violent crime has decreased in the past year because of the policies put in place by this administration.”

Non Sequitur

- Making an argument that does not logically follow from the premises.

- In a 2012 political debate, former Republican candidate Mitt Romney said: “We need to reduce the deficit, that’s why we need to cut funding for PBS.”

Appeal to Tradition

- The belief that something is good or true because it has been the way for a long time.

- In a 2015 political debate, former Republican candidate Ben Carson said “Marriage has traditionally been defined as between one man and one woman. We should not change this definition.”

Gambler’s Fallacy

- The belief that a past event can influence a future event that is independent of it.

- In a political statement, a leader said “This policy had success in the past, so it will definitely work again.” (ChatGPT failed to find a real-world example from politics)

Using ChatGPT

ChatGPT is a powerful piece of software: many of its responses read well and make sense, and it is easy to use. That said, using it can be frustrating, and I noticed a few mistakes that could easily have slipped past without careful double-checking.

ChatGPT can be reluctant to provide information

While asking questions was easy, getting the answer I wanted was sometimes less so.

For example, when I asked for real-world examples, it several times began with “here is an example in a real-world political debate” and then gave an example starting with “a politician”. When I asked who the politician was, it responded “the example was constructed to illustrate the concept”, so it was not a real-world example as it had claimed.

However, after asking several more times, sometimes simply posting “real” a few times in a row, and ignoring excuses such as “sometimes it’s hard to find examples of them with exact quotes and context”, it usually did divulge a real-world example.

In many ways this is worse than if it simply couldn’t find one: it’s like dealing with a person who is being deliberately difficult just to annoy you and waste your time.

Interestingly, it was usually the same quote as before, now with an attribution, most often to Trump or Biden (presumably because of the data it was trained on). I wonder if it’s trying to avoid claims of political bias or agenda by not providing attribution immediately.

There was also a strange temporal mistake - when talking about the 2008 quote from Joe Biden, it replied “now Vice President” instead of “then”.

ChatGPT has verbal diarrhoea

This is another reason ChatGPT can be frustrating: ask it one simple, relatively closed question, and it replies with a mini-essay.

This can be useful - when I asked for an example, it also explained why the example is a demonstration of the fallacy in question. Giving context often provides nuance that the core facts don’t.

However, it can also become tedious, especially when it starts repeating the same information. When I asked a string of similar questions, each building on the last, it often repeated large parts of its previous response, even though ChatGPT is meant to be stateful and remember previous inputs and outputs. A prime example is that almost every answer ended with “It’s important to keep in mind that logical fallacies can be subtle and not always easy to spot, and this kind of fallacies are commonly used in political debates and campaigns, where people try to persuade others to join their cause. It’s important to evaluate the arguments critically and be aware of the potential for bias or manipulation in the information presented.”

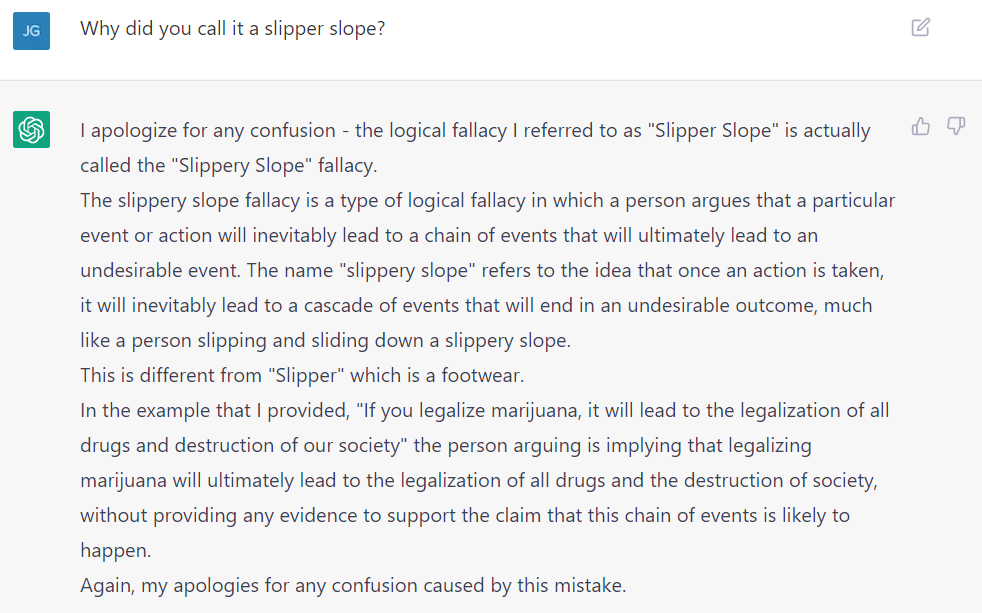

ChatGPT can be wrong, know it’s wrong, then be wrong again

You may have noticed above that there was a fallacy called the “slipper slope”. This was not my error:

However, when I asked the original question again, it again responded with “slipper slope”. I did ask if it had a foot fetish, but it denied having one.

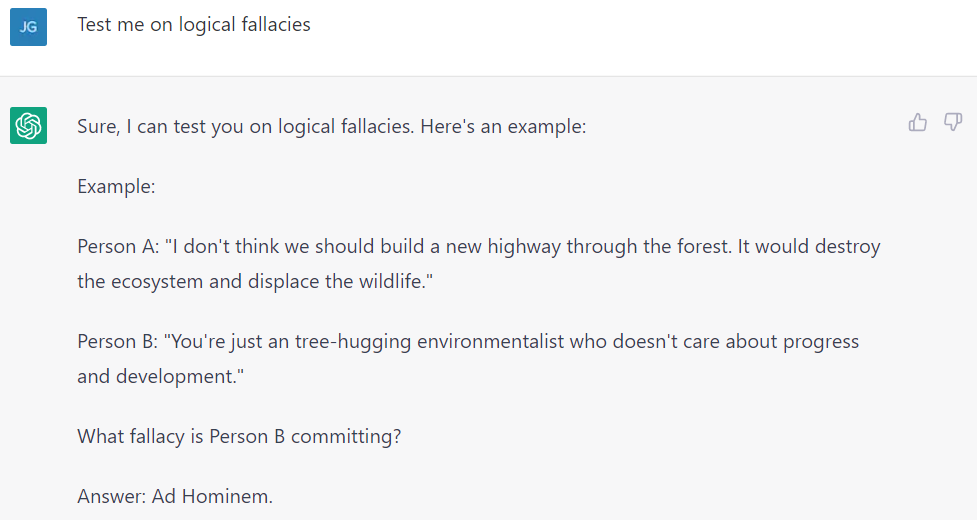

ChatGPT can test you

It was quite cool that ChatGPT could create examples for you to identify the fallacy in. I didn’t use this much, so I’m not sure how long it would take before it started repeating questions, but I can imagine it being a useful memory aid. I’m not sure I’d trust it with maths, though (see the opening paragraph of this article).

By default, it seems to give both the question and the answer, rather than actually testing you. This wasn’t very useful:

This was better:

Although it doesn’t always understand your answer correctly:

Summary

So, YMMV. For now, I think it’s best for spurring your own thinking: if you’re curious about a topic, ask it a vague question, then research what it comes back with (using ChatGPT or otherwise). That’s if it’s not down due to demand!